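1 Applicable Scenarios

This document applies to product expansion in the following scenarios:

- Scenario 1: expanding a single node to three nodes

- Scenario 2: expanding a single node to two nodes

- Scenario 3: expanding two nodes to three nodes

- Scenario 4: expanding three nodes to more than three nodes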

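2 Expansion Overview

Platform resource expansion mainly covers the Kubernetes cluster and the Proton components, which include:

• Kubernetes

• proton mariadb

• proton mongodb

• proton redis

• kafka

• zookeeper

• proton mq nsq

• proton policy engine

• proton-etcd

• nebula

Notes:

- opensearch does not need to be expanded

- All resources listed above except proton-etcd are expanded through proton-cli

- After the kafka replicas are expanded through proton-cli, the data replicas must also be modified

- nebula does not currently support expansion

- The components above are all built into Proton; external components must be expanded separately

The expansion procedures in this document target the following deployment scenarios. For any other special scenario, confirm with the R&D team before expanding:

- Single-node, single-master cluster; all services run a single replica

- Two-node, single-master cluster; all services run a single replica

- Three-node, single-master cluster; all services run a single replica

- Three-node, three-master cluster; all services run three replicas (except services that default to fewer than three replicas in a fresh three-node deployment)

Set the number of cluster masters to an odd number; the recommended maximum is 3 masters.

In multi-site deployments, expand the main site and each sub-site separately, using the same procedure as for a single site.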
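The master-count recommendation above (an odd number, at most 3) can be checked before editing the configuration. The following is a minimal sketch, not part of proton-cli; the function name `check_master_count` is hypothetical.

```shell
# Hypothetical helper: validate a planned master count against the
# recommendation in this document (odd number, at most 3).
check_master_count() {
    count=$1
    if [ $((count % 2)) -eq 0 ]; then
        echo "master count $count is even; an odd number is recommended"
        return 1
    fi
    if [ "$count" -gt 3 ]; then
        echo "master count $count exceeds the recommended maximum of 3"
        return 1
    fi
    echo "master count $count is within the recommendation"
}

# Usage: check_master_count 3
```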

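2.1 Additional Notes

- If object storage (eceph) is deployed independently, expand it by following the object storage standalone deployment and upgrade guide

- Expanding a single node to two nodes mainly targets eceph

- If the observability service is not installed, you can skip modifying the kafka data replicas (section 3.1.3)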

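3 Preparation

Note: complete the following preparation in advance on each node being added (that is, each newly purchased machine)

3.1 Install the Operating System

3.2 Configure the Network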

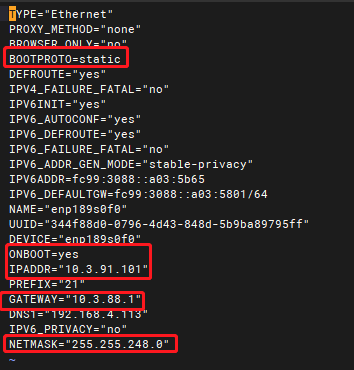

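3.2.1 Configure the Node IP

Note: the node IP must be in the same network segment as the current cluster

- Open the configuration file (use the actual NIC name):

vi /etc/sysconfig/network-scripts/ifcfg-{NIC name}

- Set BOOTPROTO=static and ONBOOT=yes, then configure IPADDR, NETMASK, and GATEWAY with the network information of your environment; the figure below is only an example

- Save and exit

- Restart the network:

systemctl restart network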

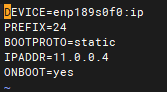
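As a text counterpart to the settings described in section 3.2.1, the sketch below prints the five lines that would be set in the ifcfg file. The function name `render_static_settings` and the sample netmask and gateway are assumptions for illustration; use the values of your own network.

```shell
# Hypothetical helper: print the static-IP settings named in section 3.2.1.
# $1 = IP address, $2 = netmask, $3 = gateway
render_static_settings() {
    cat <<EOF
BOOTPROTO=static
ONBOOT=yes
IPADDR=$1
NETMASK=$2
GATEWAY=$3
EOF
}

# Usage (netmask and gateway are placeholder values):
#   render_static_settings 10.3.91.102 255.255.255.0 10.3.91.1
```

The output contains no spaces around `=`, because ifcfg files are read as shell variable assignments.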

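3.2.2 Configure the Internal IP

Note:

- If the current cluster does not use internal IPs, skip this section

- The internal IP must be in the same network segment as the internal IPs of the existing cluster nodes

- Do not use a class D address (224.0.0.0 ~ 239.255.255.255) as the internal IP

- Under /etc/sysconfig/network-scripts/, create a configuration file named ifcfg-{NIC name}:ip (use the actual NIC name) and add the DEVICE, PREFIX, BOOTPROTO, IPADDR, and ONBOOT settings to it, filling in the NIC, IP, and prefix for your environment

- Save and exit

- Restart the network by running the following command, or reboot the system:

systemctl restart network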
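The class D restriction from section 3.2.2 can be checked mechanically before the file is written. This is a minimal sketch under stated assumptions: both function names are hypothetical, the alias device name `eth0:1` is only an example, and `BOOTPROTO=static` is assumed because the section names the key without giving a value.

```shell
# Return 0 when the IPv4 address is in the class D range 224.0.0.0 ~ 239.255.255.255.
is_class_d() {
    first_octet=${1%%.*}
    [ "$first_octet" -ge 224 ] && [ "$first_octet" -le 239 ]
}

# Hypothetical helper: print the internal-IP settings listed in section 3.2.2.
# $1 = alias device (for example eth0:1), $2 = internal IP, $3 = prefix length
render_internal_ifcfg() {
    if is_class_d "$2"; then
        echo "refusing class D address: $2" >&2
        return 1
    fi
    printf 'DEVICE=%s\nBOOTPROTO=static\nONBOOT=yes\nIPADDR=%s\nPREFIX=%s\n' "$1" "$2" "$3"
}

# Usage: render_internal_ifcfg eth0:1 11.0.0.29 24
```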

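3.3 Set the Hostname

Run the following command to set the hostname:

hostnamectl set-hostname {hostname}

3.4 Set Up Passwordless Login

Note: the current master must be able to log in to every node being added without a password

On the current master node, run the following command once for each node being added, entering the password when prompted:

ssh-copy-id {ssh_id}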
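When several nodes are being added, the command from section 3.4 can be wrapped in a loop. The sketch below assumes the `root` account on each new node and uses a hypothetical function name; setting `DRY_RUN=1` prints the commands instead of running them.

```shell
# Hypothetical helper: run ssh-copy-id once per node being added.
# With DRY_RUN set to a non-empty value, commands are printed, not executed.
copy_ssh_ids() {
    for host in "$@"; do
        ${DRY_RUN:+echo} ssh-copy-id "root@${host}"   # root is an assumption
    done
}

# Usage: copy_ssh_ids 10.3.91.102 10.3.91.103
```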

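3.5 Install Dependencies

Note: install dependencies whose version matches the current cluster nodes. If the dependency package has been deleted from the current node, download the matching version again and extract it

- On the current master node, go to the directory where the dependency package was extracted

- Run the following command to install the dependencies:

./install_deps.sh --hosts {ssh_ip1},{ssh_ip2}

If eceph is to be deployed in converged mode, use this command instead:

./install_deps.sh --hosts {ssh_ip1},{ssh_ip2} --with-eceph-remote {ssh_ip1},{ssh_ip2}

Note: ssh_ip1 and ssh_ip2 are the nodes being added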

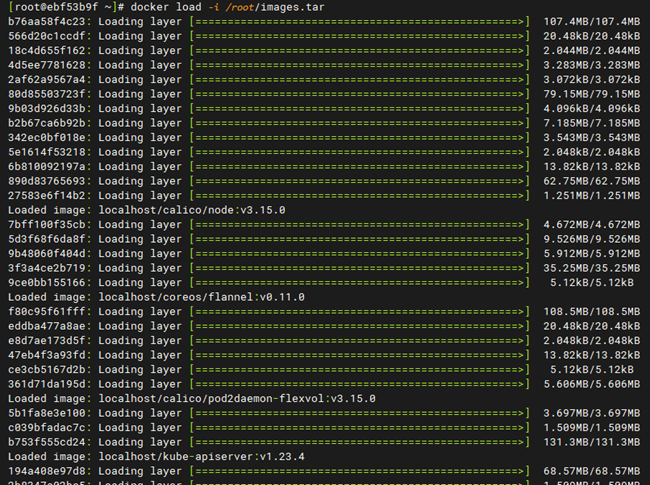

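3.6 Import Local Images (Optional)

Note: if the production environment was freshly deployed with proton 2.7.0 (corresponding to AnyShare 7.0.4.8, AnyRobot 5.0.1.7, AnyDATA 2.0.1.8) or later, skip this section. If the environment was upgraded from an earlier version, import the local images in advance by following the steps below. (AnyFabric 1.0.0.0 corresponds to proton 2.8.0, so this section is not needed when expanding it.)

Step 1: on an existing node of the current cluster, run:

scp /usr/share/proton-cs/images.tar root@{IP of the new node}:/root

Step 2: on the new node, run the following command to import the local images:

docker load -i /root/images.tar

Note: repeat the steps above for every new node joining the cluster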
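The two steps of section 3.6 can be repeated for every new node with a short loop. This sketch departs from the text in one respect, which is an assumption: step 2 is run over `ssh` from the existing node rather than by logging in to the new node. The function name is hypothetical, and `DRY_RUN=1` prints the commands instead of running them.

```shell
# Hypothetical helper: copy images.tar to each new node and load it there.
copy_and_load_images() {
    for host in "$@"; do
        ${DRY_RUN:+echo} scp /usr/share/proton-cs/images.tar "root@${host}:/root"
        # Assumption: docker load is invoked remotely over ssh
        ${DRY_RUN:+echo} ssh "root@${host}" docker load -i /root/images.tar
    done
}

# Usage: copy_and_load_images 10.3.91.102 10.3.91.103
```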

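3.7 Other Checks (Optional)

Check whether any patch has been applied to the current environment and whether the patch images have been pushed to the registry:

docker images | grep {image name}

- If two images share the same name but have different image IDs, push the image that the service is currently using to the registry

- Run the following command to push the image:

docker push registry.aishu.cn/{image path}:{image tag}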
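The "same name, different image ID" check can be sketched as a filter over `docker images` output. This is an illustration only: the function name is hypothetical, and it assumes that "same name" means the same repository, regardless of tag.

```shell
# Hypothetical helper: read "repository tag image-id" lines on stdin and
# print every repository that appears with more than one distinct image ID.
find_conflicting_images() {
    awk '
        !(($1, $3) in seen) { seen[$1, $3] = 1; ids[$1]++ }
        END { for (repo in ids) if (ids[repo] > 1) print repo }
    ' | sort
}

# Usage:
#   docker images --format '{{.Repository}} {{.Tag}} {{.ID}}' | find_conflicting_images
```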

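Note: run all expansion operations on the master node of the current cluster, and run proton-cli commands from the directory where the dependency package was extracted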

4 Expanding a Single Node to Three Nodes

In this scenario, the existing cluster has a single node and all services run a single replica

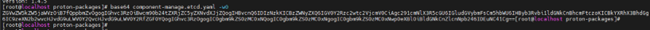

- Export the configuration by running:

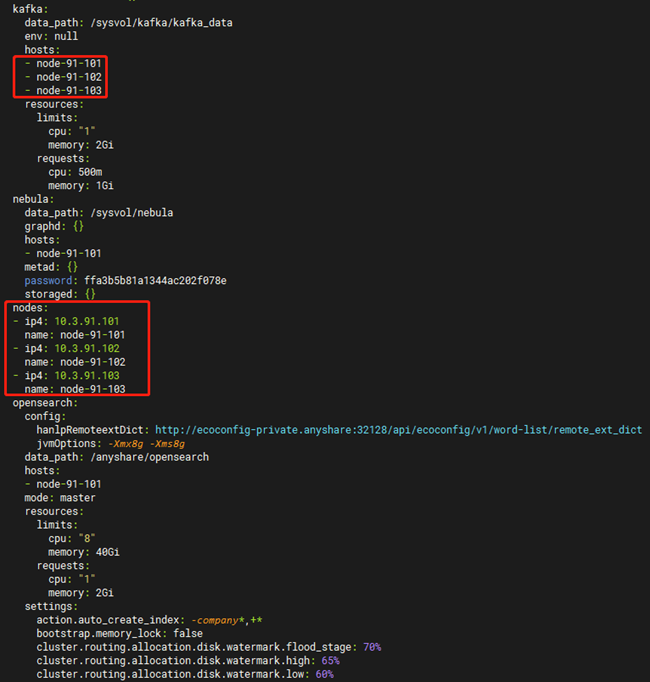

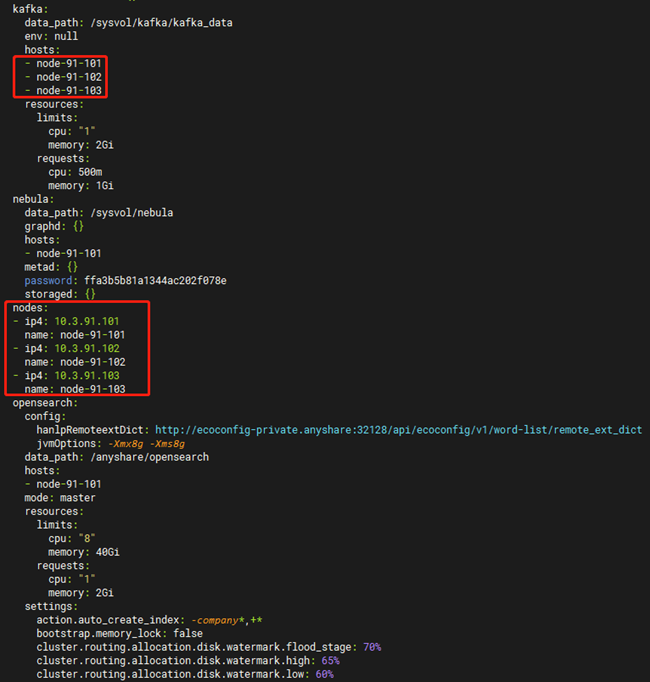

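proton-cli get conf > cluster.yaml

- Edit the configuration file and save it (see the example configuration file below)

- Expand the platform resources by running:

proton-cli apply -f cluster.yaml

- Wait for the command to finish, then confirm the expansion result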

# 注:

# 1、该配置文件仅为示例说明,最终以实际环境中获取到的配置为准,配置文件中不涉及修改的组件无需关注

# 2、配置文件中节点ip、内部ip(若未使用内部ip,无需加上internal_ip这行配置)、hostmname信息请根据实际环境填写

# 3、单节点单master环境中数据服务均为单副本,扩容到三节点三master后,服务副本数与全新部署的三节点环境保持一致(nebula除外)

# 4、各服务hosts列表中的节点名称必须包含在nodes列表内

# 5、opensearch不扩容,proton-etcd之后单独执行扩容

# 6、若获取到的配置文件中不存在grafana和prometheus,则忽略这两个组件的修改

apiVersion: v1

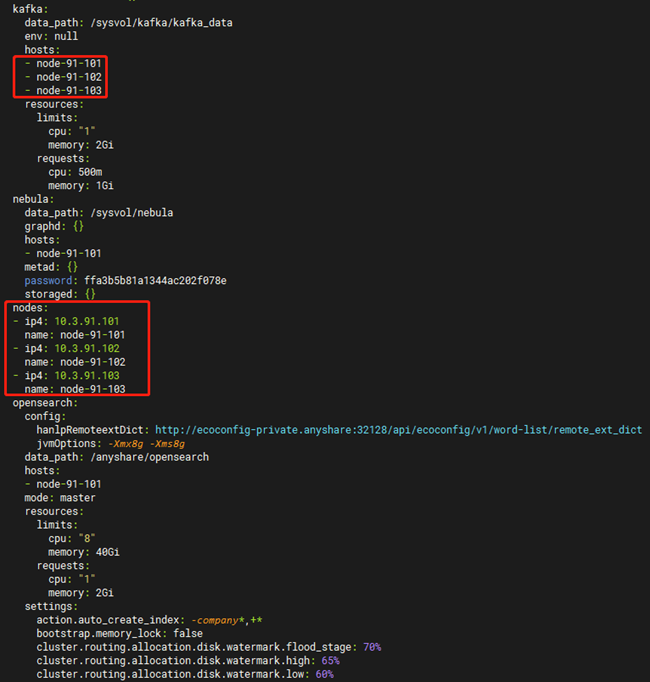

nodes:

- internal_ip: 11.0.0.4

ip4: 10.3.91.101

name: node-91-101

- internal_ip: 11.0.0.29 # 新增节点,填写内部ip、节点ip、hostname信息

ip4: 10.3.91.102

name: node-91-102

- internal_ip: 11.0.0.30 # 新增节点,填写内部ip、节点ip、hostname信息

ip4: 10.3.91.103

name: node-91-103

cms: {}

cr:

local:

ha_ports:

chartmuseum: 15001

cr_manager: 15002

registry: 15000

rpm: 15003

hosts:

- node-91-101

- node-91-102 # 新增node-91-102将容器仓库扩容到两节点

ports:

chartmuseum: 5001

cr_manager: 5002

registry: 5000

rpm: 5003

storage: /sysvol/proton_data/cr_data

cs:

addons:

- node-exporter

- kube-state-metrics

cs_controller_dir: ./service-package

docker_data_dir: /sysvol/proton_data/cs_docker_data

etcd_data_dir: /sysvol/proton_data/cs_etcd_data

ha_port: 16643

host_network:

bip: 172.33.0.1/16

pod_network_cidr: 192.169.0.0/16

service_cidr: 10.96.0.0/12

ipFamilies:

- IPv4

master:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将kubernetes扩容到3 master

- node-91-103

provisioner: local

installer_service: {}

grafana:

data_path: /sysvol/grafana

hosts:

- node-91-101

resources:

limits:

cpu: "1"

memory: 3Gi

requests:

cpu: 500m

memory: 2Gi

kafka:

data_path: /sysvol/kafka/kafka_data

env: null

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将kafka扩容到3副本

- node-91-103

resources:

limits:

cpu: "1"

memory: 2Gi

requests:

cpu: 500m

memory: 1Gi

nebula:

data_path: /sysvol/nebula

graphd: {}

hosts:

- node-91-101

metad: {}

password: ffa3b5b81a1344ac202f078e

storaged: {}

opensearch:

config:

hanlpRemoteextDict: http://ecoconfig-private.anyshare:32128/api/ecoconfig/v1/word-list/remote_ext_dict

jvmOptions: -Xmx8g -Xms8g

data_path: /anyshare/opensearch

hosts:

- node-91-101

mode: master

resources:

limits:

cpu: "8"

memory: 40Gi

requests:

cpu: "1"

memory: 2Gi

settings:

action.auto_create_index: -company*,+*

bootstrap.memory_lock: false

cluster.routing.allocation.disk.watermark.flood_stage: 70%

cluster.routing.allocation.disk.watermark.high: 65%

cluster.routing.allocation.disk.watermark.low: 60%

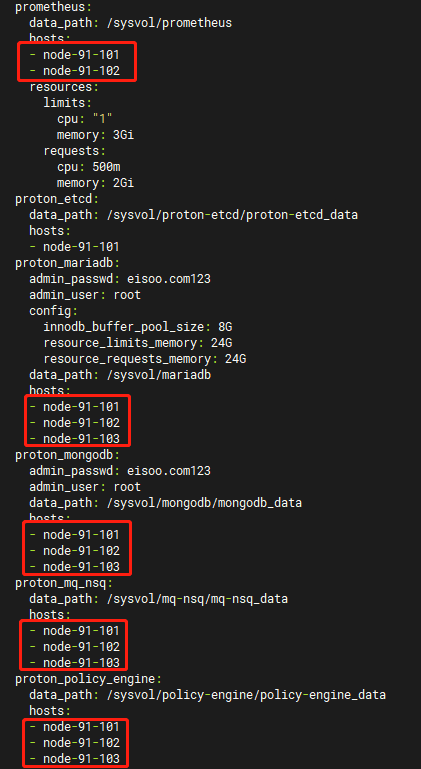

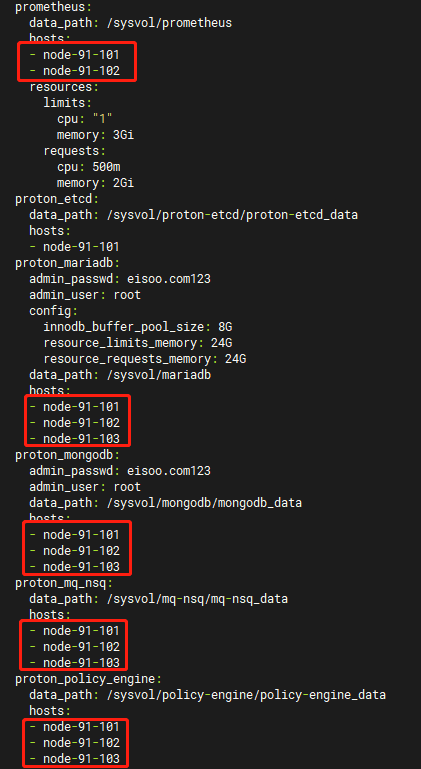

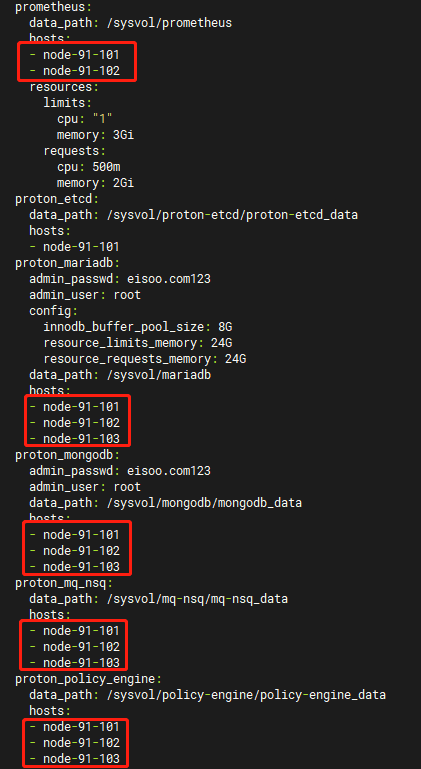

prometheus:

data_path: /sysvol/prometheus

hosts:

- node-91-101

- node-91-102 # 增加node-91-102将prometheus扩容到2副本

resources:

limits:

cpu: "1"

memory: 3Gi

requests:

cpu: 500m

memory: 2Gi

proton_etcd:

data_path: /sysvol/proton-etcd/proton-etcd_data

hosts:

- node-91-101

proton_mariadb:

admin_passwd: eisoo.com123

admin_user: root

config:

innodb_buffer_pool_size: 8G

resource_limits_memory: 24G

resource_requests_memory: 24G

data_path: /sysvol/mariadb

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将proton-mariadb扩容到3副本

- node-91-103

proton_mongodb:

admin_passwd: eisoo.com123

admin_user: root

data_path: /sysvol/mongodb/mongodb_data

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将proton-mongodb扩容到3副本

- node-91-103

proton_mq_nsq:

data_path: /sysvol/mq-nsq/mq-nsq_data

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将proton-mq-nsq扩容到3副本

- node-91-103

proton_policy_engine:

data_path: /sysvol/policy-engine/policy-engine_data

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将proton-policy-engine扩容到3副本

- node-91-103

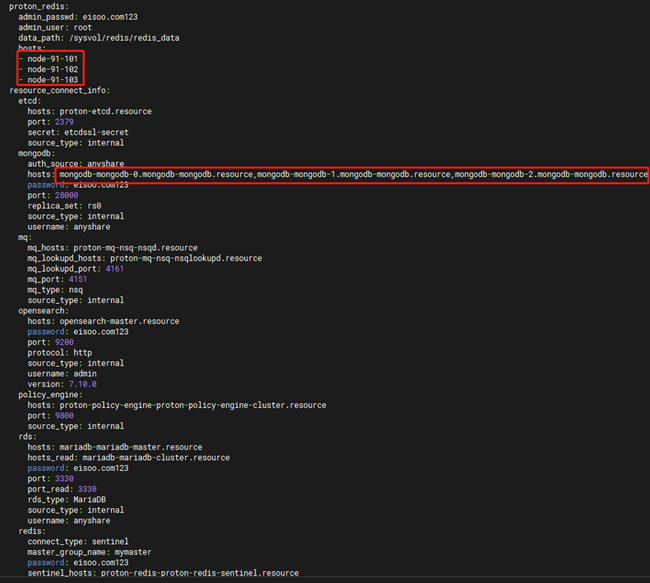

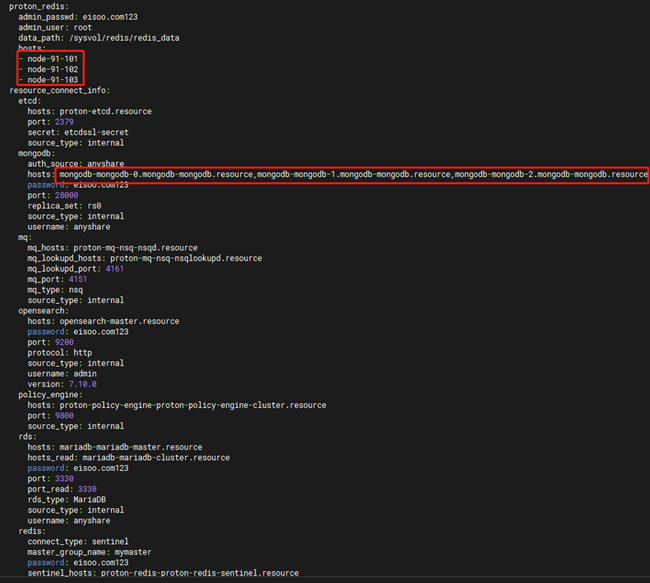

proton_redis:

admin_passwd: eisoo.com123

admin_user: root

data_path: /sysvol/redis/redis_data

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将proton-redis扩容到3副本

- node-91-103

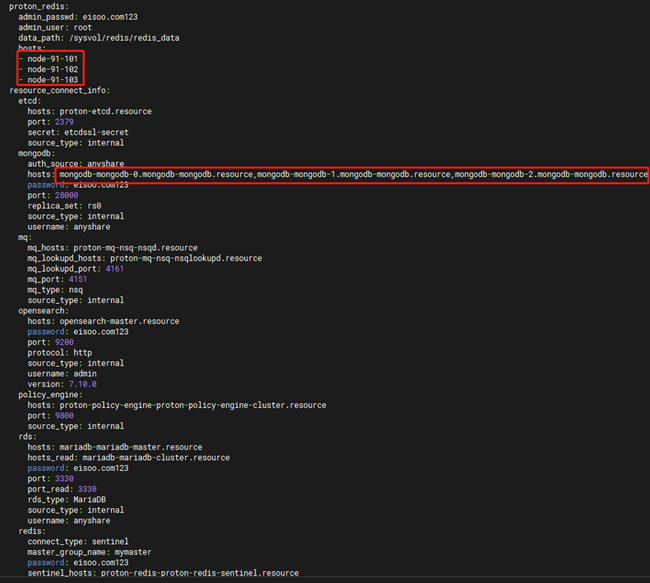

resource_connect_info:

etcd:

hosts: proton-etcd.resource

port: 2379

secret: etcdssl-secret

source_type: internal

mongodb:

auth_source: anyshare

hosts: mongodb-mongodb-0.mongodb-mongodb.resource,mongodb-mongodb-1.mongodb-mongodb.resource,mongodb-mongodb-2.mongodb-mongodb.resource

# mongodb.hosts更新为多副本连接

password: eisoo.com123

port: 28000

replica_set: rs0

source_type: internal

username: anyshare

mq:

mq_hosts: proton-mq-nsq-nsqd.resource

mq_lookupd_hosts: proton-mq-nsq-nsqlookupd.resource

mq_lookupd_port: 4161

mq_port: 4151

mq_type: nsq

source_type: internal

opensearch:

hosts: opensearch-master.resource

password: eisoo.com123

port: 9200

protocol: http

source_type: internal

username: admin

version: 7.10.0

policy_engine:

hosts: proton-policy-engine-proton-policy-engine-cluster.resource

port: 9800

source_type: internal

rds:

hosts: mariadb-mariadb-master.resource

hosts_read: mariadb-mariadb-cluster.resource

password: eisoo.com123

port: 3330

port_read: 3330

rds_type: MariaDB

source_type: internal

username: anyshare

redis:

connect_type: sentinel

master_group_name: mymaster

password: eisoo.com123

sentinel_hosts: proton-redis-proton-redis-sentinel.resource

sentinel_password: eisoo.com123

sentinel_port: 26379

sentinel_username: root

source_type: internal

username: root

zookeeper:

data_path: /sysvol/zookeeper/zookeeper_data

env: null

hosts:

- node-91-101

- node-91-102 # 增加node-91-102及node-91-103将zookeeper扩容到3副本

- node-91-103

resources:

limits:

cpu: "1"

memory: 2Gi

requests:

cpu: 500m

memory: 1Gi

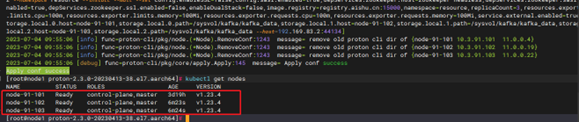

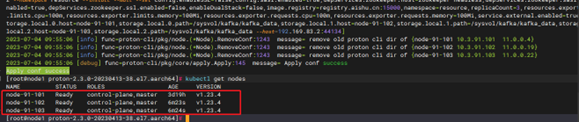

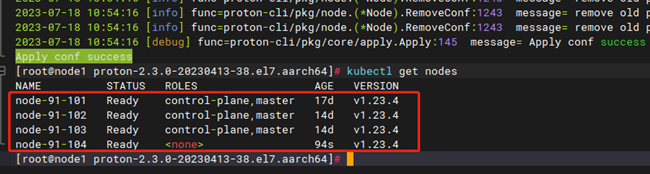

Confirming the Expansion Result

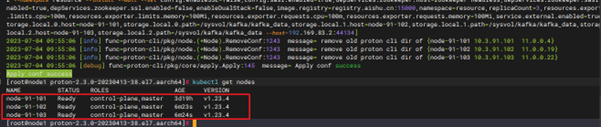

1. Check the cluster status and confirm that it is a three-node, three-master cluster:

kubectl get nodes

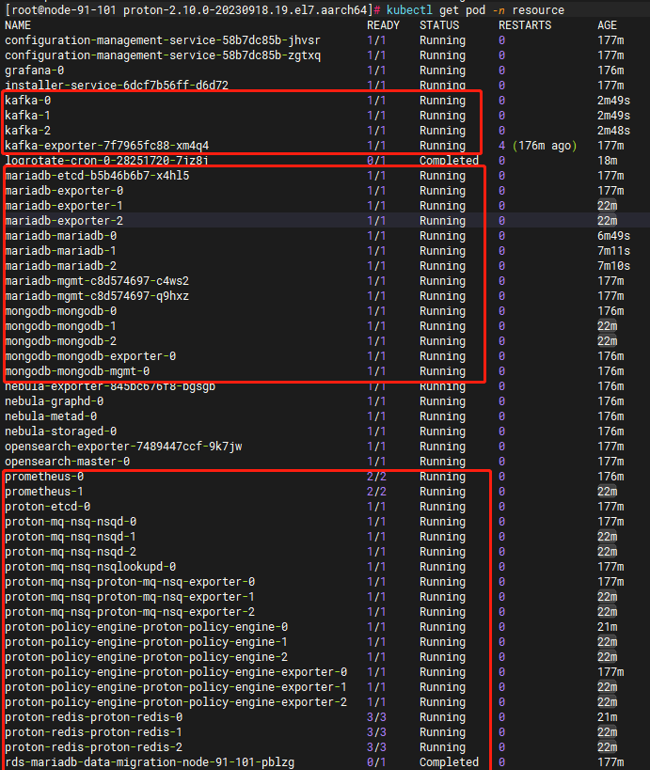

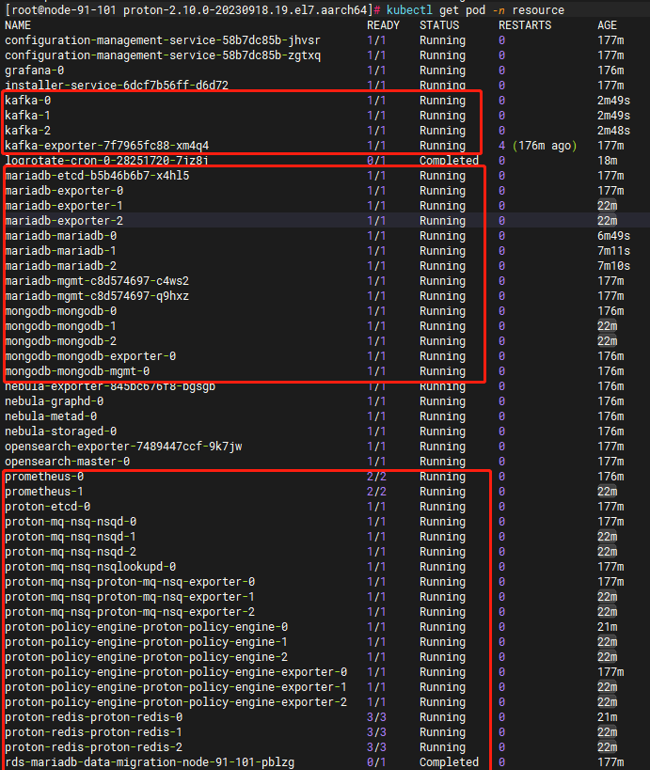

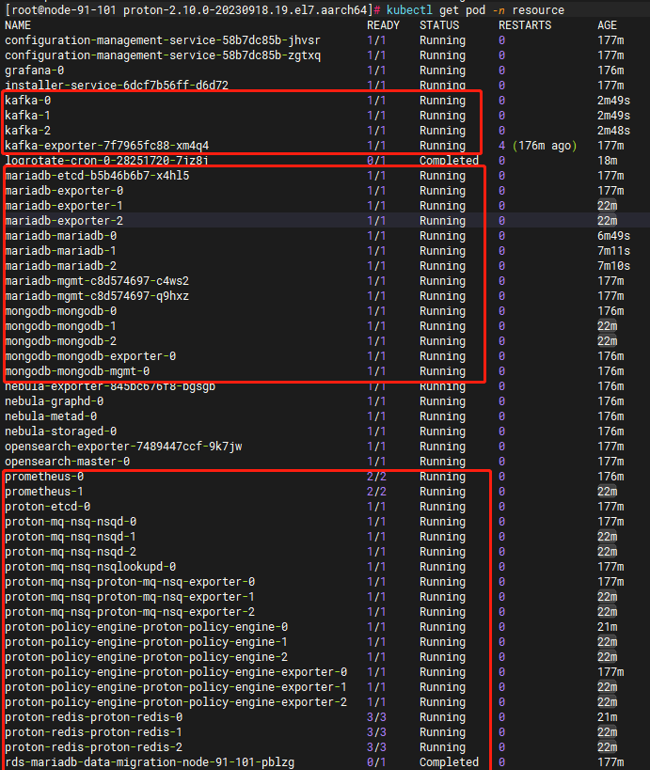

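2. Check the replica count and status of the data services:

kubectl get pod -n resource

proton-mariadb, proton-mongodb, proton-policy-engine, proton-mq-nsq, proton-redis, kafka, and zookeeper should each have 3 replicas in the Running state. While these services are synchronizing data, READY shows 0/1 and STATUS shows Running; you can continue with the subsequent steps, and READY changes to 1/1 once synchronization completes. Jobs in the Completed state with READY 0/1 are normal.

3. Check the cluster configuration:

proton-cli get conf

The configuration should list three nodes, and proton-mariadb, proton-mongodb, proton-policy-engine, proton-mq-nsq, proton-redis, kafka, and zookeeper should each show 3 replicas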
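The replica check above can be scripted by counting Running pods per service. The sketch below is not part of proton-cli; the function name is hypothetical, and the pod-name prefix `mongodb-mongodb` is inferred from the `mongodb-mongodb-0` host names in the example configuration, so confirm the actual prefixes in your environment.

```shell
# Hypothetical helper: read `kubectl get pod -n resource` output on stdin and
# count pods whose name starts with the given prefix and whose STATUS is Running.
# A pod still synchronizing data (READY 0/1, STATUS Running) is counted as well.
count_running() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 && $3 == "Running" { n++ }
        END { print n + 0 }
    '
}

# Usage:
#   kubectl get pod -n resource | count_running mongodb-mongodb
```

A result of 3 for each expanded service matches the expectation stated above.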

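5 Expanding a Single Node to Two Nodes

In this scenario, the expansion mainly targets object storage (eceph); only one kubernetes worker node needs to be added

- Export the configuration by running:

proton-cli get conf > cluster.yaml

- Edit the configuration file and save it (see the example configuration file below)

- Expand the platform resources by running:

proton-cli apply -f cluster.yaml

- Wait for the command to finish, then confirm the expansion result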

# Notes:
# 1. This configuration file is only an example; the configuration retrieved from the actual environment prevails. Components that are not being modified can be ignored
# 2. Fill in the node IP, internal IP (omit the internal_ip line if internal IPs are not used), and hostname according to the actual environment
# 3. In a single-node, single-master environment all data services run a single replica; expanding to two nodes with a single master only adds one kubernetes worker node and leaves the other services unchanged
apiVersion: v1
nodes:
- internal_ip: 11.0.0.4
  ip4: 10.3.91.101
  name: node-91-101
- internal_ip: 11.0.0.29 # New node: fill in the internal IP, node IP, and hostname
  ip4: 10.3.91.102
  name: node-91-102
cms: {}
cr:
  local:
    ha_ports:
      chartmuseum: 15001
      cr_manager: 15002
      registry: 15000
      rpm: 15003
    hosts:
    - node-91-101
    ports:
      chartmuseum: 5001
      cr_manager: 5002
      registry: 5000
      rpm: 5003
    storage: /sysvol/proton_data/cr_data
cs:
  addons:
  - node-exporter
  - kube-state-metrics
  cs_controller_dir: ./service-package
  docker_data_dir: /sysvol/proton_data/cs_docker_data
  etcd_data_dir: /sysvol/proton_data/cs_etcd_data
  ha_port: 16643
  host_network:
    bip: 172.33.0.1/16
    pod_network_cidr: 192.169.0.0/16
    service_cidr: 10.96.0.0/12
  ipFamilies:
  - IPv4
  master:
  - node-91-101
  provisioner: local
installer_service: {}
grafana:
  data_path: /sysvol/grafana
  hosts:
  - node-91-101
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
kafka:
  data_path: /sysvol/kafka/kafka_data
  env: null
  hosts:
  - node-91-101
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi
nebula:
  data_path: /sysvol/nebula
  graphd: {}
  hosts:
  - node-91-101
  metad: {}
  password: ffa3b5b81a1344ac202f078e
  storaged: {}
opensearch:
  config:
    hanlpRemoteextDict: http://ecoconfig-private.anyshare:32128/api/ecoconfig/v1/word-list/remote_ext_dict
    jvmOptions: -Xmx8g -Xms8g
  data_path: /anyshare/opensearch
  hosts:
  - node-91-101
  mode: master
  resources:
    limits:
      cpu: "8"
      memory: 40Gi
    requests:
      cpu: "1"
      memory: 2Gi
  settings:
    action.auto_create_index: -company*,+*
    bootstrap.memory_lock: false
    cluster.routing.allocation.disk.watermark.flood_stage: 70%
    cluster.routing.allocation.disk.watermark.high: 65%
    cluster.routing.allocation.disk.watermark.low: 60%
prometheus:
  data_path: /sysvol/prometheus
  hosts:
  - node-91-101
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
proton_etcd:
  data_path: /sysvol/proton-etcd/proton-etcd_data
  hosts:
  - node-91-101
proton_mariadb:
  admin_passwd: eisoo.com123
  admin_user: root
  config:
    innodb_buffer_pool_size: 8G
    resource_limits_memory: 24G
    resource_requests_memory: 24G
  data_path: /sysvol/mariadb
  hosts:
  - node-91-101
proton_mongodb:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/mongodb/mongodb_data
  hosts:
  - node-91-101
proton_mq_nsq:
  data_path: /sysvol/mq-nsq/mq-nsq_data
  hosts:
  - node-91-101
proton_policy_engine:
  data_path: /sysvol/policy-engine/policy-engine_data
  hosts:
  - node-91-101
proton_redis:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/redis/redis_data
  hosts:
  - node-91-101
resource_connect_info:
  etcd:
    hosts: proton-etcd.resource
    port: 2379
    secret: etcdssl-secret
    source_type: internal
  mongodb:
    auth_source: anyshare
    hosts: mongodb-mongodb-0.mongodb-mongodb.resource
    password: eisoo.com123
    port: 28000
    replica_set: rs0
    source_type: internal
    username: anyshare
  mq:
    mq_hosts: proton-mq-nsq-nsqd.resource
    mq_lookupd_hosts: proton-mq-nsq-nsqlookupd.resource
    mq_lookupd_port: 4161
    mq_port: 4151
    mq_type: nsq
    source_type: internal
  opensearch:
    hosts: opensearch-master.resource
    password: eisoo.com123
    port: 9200
    protocol: http
    source_type: internal
    username: admin
    version: 7.10.0
  policy_engine:
    hosts: proton-policy-engine-proton-policy-engine-cluster.resource
    port: 9800
    source_type: internal
  rds:
    hosts: mariadb-mariadb-master.resource
    hosts_read: mariadb-mariadb-cluster.resource
    password: eisoo.com123
    port: 3330
    port_read: 3330
    rds_type: MariaDB
    source_type: internal
    username: anyshare
  redis:
    connect_type: sentinel
    master_group_name: mymaster
    password: eisoo.com123
    sentinel_hosts: proton-redis-proton-redis-sentinel.resource
    sentinel_password: eisoo.com123
    sentinel_port: 26379
    sentinel_username: root
    source_type: internal
    username: root
zookeeper:
  data_path: /sysvol/zookeeper/zookeeper_data
  env: null
  hosts:
  - node-91-101
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi

Confirming the Expansion Result

Check the cluster status and confirm that it is a two-node, single-master cluster:

kubectl get nodes

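6 Expanding Two Nodes to Three Nodes

In this scenario, the existing cluster has two nodes and 1 master node, and all services run a single replica

- Export the configuration by running:

proton-cli get conf > cluster.yaml

- Edit the configuration file and save it (see the example configuration file below)

- Expand the platform resources by running:

proton-cli apply -f cluster.yaml

- Wait for the command to finish, then confirm the expansion result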

# Notes:
# 1. This configuration file is only an example; the configuration retrieved from the actual environment prevails. Components that are not being modified can be ignored
# 2. Fill in the node IP, internal IP (omit the internal_ip line if internal IPs are not used), and hostname according to the actual environment
# 3. In a two-node, single-master environment all data services run a single replica; after expansion to three nodes and three masters, the replica counts match a freshly deployed three-node environment (except nebula)
# 4. Every node name in a service's hosts list must appear in the nodes list
# 5. opensearch is not expanded; proton-etcd is expanded separately afterwards
# 6. If grafana and prometheus are absent from the retrieved configuration file, ignore the changes to these two components
apiVersion: v1
nodes:
- internal_ip: 11.0.0.4
  ip4: 10.3.91.101
  name: node-91-101
- internal_ip: 11.0.0.29
  ip4: 10.3.91.102
  name: node-91-102
- internal_ip: 11.0.0.30 # New node: fill in the internal IP, node IP, and hostname
  ip4: 10.3.91.103
  name: node-91-103
cms: {}
cr:
  local:
    ha_ports:
      chartmuseum: 15001
      cr_manager: 15002
      registry: 15000
      rpm: 15003
    hosts:
    - node-91-101
    - node-91-102 # Add node-91-102 to expand the container registry to two nodes
    ports:
      chartmuseum: 5001
      cr_manager: 5002
      registry: 5000
      rpm: 5003
    storage: /sysvol/proton_data/cr_data
cs:
  addons:
  - node-exporter
  - kube-state-metrics
  cs_controller_dir: ./service-package
  docker_data_dir: /sysvol/proton_data/cs_docker_data
  etcd_data_dir: /sysvol/proton_data/cs_etcd_data
  ha_port: 16643
  host_network:
    bip: 172.33.0.1/16
    pod_network_cidr: 192.169.0.0/16
    service_cidr: 10.96.0.0/12
  ipFamilies:
  - IPv4
  master:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand kubernetes to 3 masters
  - node-91-103
  provisioner: local
installer_service: {}
grafana:
  data_path: /sysvol/grafana
  hosts:
  - node-91-101
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
kafka:
  data_path: /sysvol/kafka/kafka_data
  env: null
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand kafka to 3 replicas
  - node-91-103
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi
nebula:
  data_path: /sysvol/nebula
  graphd: {}
  hosts:
  - node-91-101
  metad: {}
  password: ffa3b5b81a1344ac202f078e
  storaged: {}
opensearch:
  config:
    hanlpRemoteextDict: http://ecoconfig-private.anyshare:32128/api/ecoconfig/v1/word-list/remote_ext_dict
    jvmOptions: -Xmx8g -Xms8g
  data_path: /anyshare/opensearch
  hosts:
  - node-91-101
  mode: master
  resources:
    limits:
      cpu: "8"
      memory: 40Gi
    requests:
      cpu: "1"
      memory: 2Gi
  settings:
    action.auto_create_index: -company*,+*
    bootstrap.memory_lock: false
    cluster.routing.allocation.disk.watermark.flood_stage: 70%
    cluster.routing.allocation.disk.watermark.high: 65%
    cluster.routing.allocation.disk.watermark.low: 60%
prometheus:
  data_path: /sysvol/prometheus
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 to expand prometheus to 2 replicas
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
proton_etcd:
  data_path: /sysvol/proton-etcd/proton-etcd_data
  hosts:
  - node-91-101
proton_mariadb:
  admin_passwd: eisoo.com123
  admin_user: root
  config:
    innodb_buffer_pool_size: 8G
    resource_limits_memory: 24G
    resource_requests_memory: 24G
  data_path: /sysvol/mariadb
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-mariadb to 3 replicas
  - node-91-103
proton_mongodb:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/mongodb/mongodb_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-mongodb to 3 replicas
  - node-91-103
proton_mq_nsq:
  data_path: /sysvol/mq-nsq/mq-nsq_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-mq-nsq to 3 replicas
  - node-91-103
proton_policy_engine:
  data_path: /sysvol/policy-engine/policy-engine_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-policy-engine to 3 replicas
  - node-91-103
proton_redis:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/redis/redis_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-redis to 3 replicas
  - node-91-103
resource_connect_info:
  etcd:
    hosts: proton-etcd.resource
    port: 2379
    secret: etcdssl-secret
    source_type: internal
  mongodb:
    auth_source: anyshare
    hosts: mongodb-mongodb-0.mongodb-mongodb.resource,mongodb-mongodb-1.mongodb-mongodb.resource,mongodb-mongodb-2.mongodb-mongodb.resource
    # mongodb.hosts is updated to the multi-replica connection string
    password: eisoo.com123
    port: 28000
    replica_set: rs0
    source_type: internal
    username: anyshare
  mq:
    mq_hosts: proton-mq-nsq-nsqd.resource
    mq_lookupd_hosts: proton-mq-nsq-nsqlookupd.resource
    mq_lookupd_port: 4161
    mq_port: 4151
    mq_type: nsq
    source_type: internal
  opensearch:
    hosts: opensearch-master.resource
    password: eisoo.com123
    port: 9200
    protocol: http
    source_type: internal
    username: admin
    version: 7.10.0
  policy_engine:
    hosts: proton-policy-engine-proton-policy-engine-cluster.resource
    port: 9800
    source_type: internal
  rds:
    hosts: mariadb-mariadb-master.resource
    hosts_read: mariadb-mariadb-cluster.resource
    password: eisoo.com123
    port: 3330
    port_read: 3330
    rds_type: MariaDB
    source_type: internal
    username: anyshare
  redis:
    connect_type: sentinel
    master_group_name: mymaster
    password: eisoo.com123
    sentinel_hosts: proton-redis-proton-redis-sentinel.resource
    sentinel_password: eisoo.com123
    sentinel_port: 26379
    sentinel_username: root
    source_type: internal
    username: root
zookeeper:
  data_path: /sysvol/zookeeper/zookeeper_data
  env: null
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand zookeeper to 3 replicas
  - node-91-103
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi
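The rule that every name in a hosts list must also appear in the nodes list can be checked before running `proton-cli apply`. The following is a rough sketch, not a proton-cli feature: the function name is hypothetical, and it assumes the edited file keeps the usual YAML layout in which `nodes:` is a top-level key that precedes the services, and each `hosts:` or `master:` key is followed by `- name` items.

```shell
# Hypothetical helper: print every name used in a hosts:/master: list that is
# missing from nodes:. The exit status is non-zero if any such name is found.
check_hosts_in_nodes() {
    awk '
        /^nodes:/                   { in_nodes = 1; next }
        in_nodes && /^[a-zA-Z_]/    { in_nodes = 0 }   # next top-level key ends nodes
        in_nodes {
            for (i = 1; i < NF; i++)
                if ($i == "name:") known[$(i + 1)] = 1
            next
        }
        /^ *(hosts|master):[ \t]*$/ { in_list = 1; next }
        in_list && $1 == "-"        { if (!($2 in known)) { print $2; bad = 1 }; next }
                                    { in_list = 0 }
        END                         { exit bad + 0 }
    ' "$1"
}

# Usage: check_hosts_in_nodes cluster.yaml && proton-cli apply -f cluster.yaml
```

Scalar entries such as `hosts: proton-etcd.resource` under resource_connect_info are ignored, because only keys followed by a list are inspected.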

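Confirming the Expansion Result

- Check the cluster status and confirm that it is a three-node, three-master cluster:

kubectl get nodes

- Check the replica count and status of the data services:

kubectl get pod -n resource

proton-mariadb, proton-mongodb, proton-policy-engine, proton-mq-nsq, proton-redis, kafka, and zookeeper should each have three replicas in the Running state. While these services are synchronizing data, READY shows 0/1 and STATUS shows Running; you can continue with the subsequent steps, and READY changes to 1/1 once synchronization completes. Jobs in the Completed state with READY 0/1 are normal.

- Check the cluster configuration:

proton-cli get conf

The configuration should list three nodes, and proton-mariadb, proton-mongodb, proton-policy-engine, proton-mq-nsq, proton-redis, kafka, and zookeeper should each show three replicas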

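7 Expanding Three Nodes with a Single Master to Three Nodes with Three Masters

In this scenario, the existing cluster has three nodes and 1 master node, and all services run a single replica

- Export the configuration by running:

proton-cli get conf > cluster.yaml

- Edit the configuration file and save it (see the example configuration file below)

- Expand the platform resources by running:

proton-cli apply -f cluster.yaml

- Wait for the command to finish, then confirm the expansion result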

# Notes:
# 1. This configuration file is only an example; the configuration retrieved from the actual environment prevails. Components that are not being modified can be ignored
# 2. Fill in the node IP, internal IP (omit the internal_ip line if internal IPs are not used), and hostname according to the actual environment
# 3. In a three-node, single-master environment all data services run a single replica; after expansion to three nodes and three masters, the replica counts match a freshly deployed three-node environment (except nebula)
# 4. Every node name in a service's hosts list must appear in the nodes list
# 5. opensearch is not expanded; proton-etcd is expanded separately afterwards
# 6. If grafana and prometheus are absent from the retrieved configuration file, ignore the changes to these two components
apiVersion: v1
nodes:
- internal_ip: 11.0.0.4
  ip4: 10.3.91.101
  name: node-91-101
- internal_ip: 11.0.0.29
  ip4: 10.3.91.102
  name: node-91-102
- internal_ip: 11.0.0.30
  ip4: 10.3.91.103
  name: node-91-103
cms: {}
cr:
  local:
    ha_ports:
      chartmuseum: 15001
      cr_manager: 15002
      registry: 15000
      rpm: 15003
    hosts:
    - node-91-101
    - node-91-102 # Add node-91-102 to expand the container registry to two nodes
    ports:
      chartmuseum: 5001
      cr_manager: 5002
      registry: 5000
      rpm: 5003
    storage: /sysvol/proton_data/cr_data
cs:
  addons:
  - node-exporter
  - kube-state-metrics
  cs_controller_dir: ./service-package
  docker_data_dir: /sysvol/proton_data/cs_docker_data
  etcd_data_dir: /sysvol/proton_data/cs_etcd_data
  ha_port: 16643
  host_network:
    bip: 172.33.0.1/16
    pod_network_cidr: 192.169.0.0/16
    service_cidr: 10.96.0.0/12
  ipFamilies:
  - IPv4
  master:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand kubernetes to 3 masters
  - node-91-103
  provisioner: local
installer_service: {}
grafana:
  data_path: /sysvol/grafana
  hosts:
  - node-91-101
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
kafka:
  data_path: /sysvol/kafka/kafka_data
  env: null
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand kafka to 3 replicas
  - node-91-103
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi
nebula:
  data_path: /sysvol/nebula
  graphd: {}
  hosts:
  - node-91-101
  metad: {}
  password: ffa3b5b81a1344ac202f078e
  storaged: {}
opensearch:
  config:
    hanlpRemoteextDict: http://ecoconfig-private.anyshare:32128/api/ecoconfig/v1/word-list/remote_ext_dict
    jvmOptions: -Xmx8g -Xms8g
  data_path: /anyshare/opensearch
  hosts:
  - node-91-101
  mode: master
  resources:
    limits:
      cpu: "8"
      memory: 40Gi
    requests:
      cpu: "1"
      memory: 2Gi
  settings:
    action.auto_create_index: -company*,+*
    bootstrap.memory_lock: false
    cluster.routing.allocation.disk.watermark.flood_stage: 70%
    cluster.routing.allocation.disk.watermark.high: 65%
    cluster.routing.allocation.disk.watermark.low: 60%
prometheus:
  data_path: /sysvol/prometheus
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 to expand prometheus to 2 replicas
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
proton_etcd:
  data_path: /sysvol/proton-etcd/proton-etcd_data
  hosts:
  - node-91-101
proton_mariadb:
  admin_passwd: eisoo.com123
  admin_user: root
  config:
    innodb_buffer_pool_size: 8G
    resource_limits_memory: 24G
    resource_requests_memory: 24G
  data_path: /sysvol/mariadb
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-mariadb to 3 replicas
  - node-91-103
proton_mongodb:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/mongodb/mongodb_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-mongodb to 3 replicas
  - node-91-103
proton_mq_nsq:
  data_path: /sysvol/mq-nsq/mq-nsq_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-mq-nsq to 3 replicas
  - node-91-103
proton_policy_engine:
  data_path: /sysvol/policy-engine/policy-engine_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-policy-engine to 3 replicas
  - node-91-103
proton_redis:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/redis/redis_data
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand proton-redis to 3 replicas
  - node-91-103
resource_connect_info:
  etcd:
    hosts: proton-etcd.resource
    port: 2379
    secret: etcdssl-secret
    source_type: internal
  mongodb:
    auth_source: anyshare
    hosts: mongodb-mongodb-0.mongodb-mongodb.resource,mongodb-mongodb-1.mongodb-mongodb.resource,mongodb-mongodb-2.mongodb-mongodb.resource
    # mongodb.hosts is updated to the multi-replica connection string
    password: eisoo.com123
    port: 28000
    replica_set: rs0
    source_type: internal
    username: anyshare
  mq:
    mq_hosts: proton-mq-nsq-nsqd.resource
    mq_lookupd_hosts: proton-mq-nsq-nsqlookupd.resource
    mq_lookupd_port: 4161
    mq_port: 4151
    mq_type: nsq
    source_type: internal
  opensearch:
    hosts: opensearch-master.resource
    password: eisoo.com123
    port: 9200
    protocol: http
    source_type: internal
    username: admin
    version: 7.10.0
  policy_engine:
    hosts: proton-policy-engine-proton-policy-engine-cluster.resource
    port: 9800
    source_type: internal
  rds:
    hosts: mariadb-mariadb-master.resource
    hosts_read: mariadb-mariadb-cluster.resource
    password: eisoo.com123
    port: 3330
    port_read: 3330
    rds_type: MariaDB
    source_type: internal
    username: anyshare
  redis:
    connect_type: sentinel
    master_group_name: mymaster
    password: eisoo.com123
    sentinel_hosts: proton-redis-proton-redis-sentinel.resource
    sentinel_password: eisoo.com123
    sentinel_port: 26379
    sentinel_username: root
    source_type: internal
    username: root
zookeeper:
  data_path: /sysvol/zookeeper/zookeeper_data
  env: null
  hosts:
  - node-91-101
  - node-91-102 # Add node-91-102 and node-91-103 to expand zookeeper to 3 replicas
  - node-91-103
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi

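Confirming the Expansion Result

- Check the cluster status and confirm that it is a three-node, three-master cluster:

kubectl get nodes

- Check the replica count and status of the data services:

kubectl get pod -n resource

proton-mariadb, proton-mongodb, proton-policy-engine, proton-mq-nsq, proton-redis, kafka, and zookeeper should each have three replicas in the Running state. While these services are synchronizing data, READY shows 0/1 and STATUS shows Running; you can continue with the subsequent steps, and READY changes to 1/1 once synchronization completes. Jobs in the Completed state with READY 0/1 are normal.

- Check the cluster configuration:

proton-cli get conf

The configuration should list three nodes, and proton-mariadb, proton-mongodb, proton-policy-engine, proton-mq-nsq, proton-redis, kafka, and zookeeper should each show three replicas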

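8 Expanding Three Nodes with Three Masters to More Than Three Nodes

In this scenario, the existing cluster has three nodes and 3 master nodes, the cr service runs on 2 nodes, opensearch runs a single replica, and all other services run three replicas. Expansion only requires adding kubernetes worker nodes; the master nodes and data services need no further expansion

- Export the configuration by running:

proton-cli get conf > cluster.yaml

- Edit the configuration file and save it (see the example configuration file below)

- Expand the platform resources by running:

proton-cli apply -f cluster.yaml

- Wait for the command to finish, then confirm the expansion result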

# 注:

# 1、该配置文件仅为示例说明,最终以实际环境中获取到的配置为准,配置文件中不涉及修改的组件无需关注

# 2、配置文件中节点ip、内部ip(若未使用内部ip,无需加上internal_ip这行配置)、hostmname信息请根据实际环境填写

# 3、三节点三master扩容到三节点以上仅需扩容kubernetes的worker节点

apiVersion: v1

nodes:

- internal_ip: 11.0.0.4

ip4: 10.3.91.101

name: node-91-101

- internal_ip: 11.0.0.29

ip4: 10.3.91.102

name: node-91-102

- internal_ip: 11.0.0.30

ip4: 10.3.91.103

name: node-91-103

- internal_ip: 11.0.0.31 # 新增节点,填写内部ip、节点ip、hostname信息;若需增加多个节点,在nodes列表中依次添加即可

ip4: 10.3.91.104

name: node-91-104

cms: {}

cr:

local:

ha_ports:

chartmuseum: 15001

cr_manager: 15002

registry: 15000

rpm: 15003

hosts:

- node-91-101

- node-91-102

ports:

chartmuseum: 5001

cr_manager: 5002

registry: 5000

rpm: 5003

storage: /sysvol/proton_data/cr_data

cs:

addons:

- node-exporter

- kube-state-metrics

cs_controller_dir: ./service-package

docker_data_dir: /sysvol/proton_data/cs_docker_data

etcd_data_dir: /sysvol/proton_data/cs_etcd_data

ha_port: 16643

host_network:

bip: 172.33.0.1/16

pod_network_cidr: 192.169.0.0/16

service_cidr: 10.96.0.0/12

ipFamilies:

- IPv4

master:

- node-91-101

- node-91-102

- node-91-103

provisioner: local

installer_service: {}

grafana:

data_path: /sysvol/grafana

hosts:

- node-91-101

resources:

limits:

cpu: "1"

memory: 3Gi

requests:

cpu: 500m

memory: 2Gi

kafka:

data_path: /sysvol/kafka/kafka_data

env: null

hosts:

- node-91-101

- node-91-102

- node-91-103

resources:

limits:

cpu: "1"

memory: 2Gi

requests:

cpu: 500m

memory: 1Gi

nebula:

data_path: /sysvol/nebula

graphd: {}

hosts:

- node-91-101

metad: {}

password: ffa3b5b81a1344ac202f078e

storaged: {}

opensearch:

config:

hanlpRemoteextDict: http://ecoconfig-private.anyshare:32128/api/ecoconfig/v1/word-list/remote_ext_dict

jvmOptions: -Xmx8g -Xms8g

data_path: /anyshare/opensearch

hosts:

- node-91-101

mode: master

resources:

limits:

cpu: "8"

memory: 40Gi

requests:

cpu: "1"

memory: 2Gi

settings:

action.auto_create_index: -company*,+*

bootstrap.memory_lock: false

cluster.routing.allocation.disk.watermark.flood_stage: 70%

cluster.routing.allocation.disk.watermark.high: 65%

cluster.routing.allocation.disk.watermark.low: 60%

prometheus:
  data_path: /sysvol/prometheus
  hosts:
  - node-91-101
  - node-91-102
  resources:
    limits:
      cpu: "1"
      memory: 3Gi
    requests:
      cpu: 500m
      memory: 2Gi
proton_etcd:
  data_path: /sysvol/proton-etcd/proton-etcd_data
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103

proton_mariadb:
  admin_passwd: eisoo.com123
  admin_user: root
  config:
    innodb_buffer_pool_size: 8G
    resource_limits_memory: 24G
    resource_requests_memory: 24G
  data_path: /sysvol/mariadb
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103
proton_mongodb:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/mongodb/mongodb_data
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103
proton_mq_nsq:
  data_path: /sysvol/mq-nsq/mq-nsq_data
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103
proton_policy_engine:
  data_path: /sysvol/policy-engine/policy-engine_data
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103
proton_redis:
  admin_passwd: eisoo.com123
  admin_user: root
  data_path: /sysvol/redis/redis_data
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103

resource_connect_info:
  etcd:
    hosts: proton-etcd.resource
    port: 2379
    secret: etcdssl-secret
    source_type: internal
  mongodb:
    auth_source: anyshare
    hosts: mongodb-mongodb-0.mongodb-mongodb.resource,mongodb-mongodb-1.mongodb-mongodb.resource,mongodb-mongodb-2.mongodb-mongodb.resource
    password: eisoo.com123
    port: 28000
    replica_set: rs0
    source_type: internal
    username: anyshare
  mq:
    mq_hosts: proton-mq-nsq-nsqd.resource
    mq_lookupd_hosts: proton-mq-nsq-nsqlookupd.resource
    mq_lookupd_port: 4161
    mq_port: 4151
    mq_type: nsq
    source_type: internal
  opensearch:
    hosts: opensearch-master.resource
    password: eisoo.com123
    port: 9200
    protocol: http
    source_type: internal
    username: admin
    version: 7.10.0
  policy_engine:
    hosts: proton-policy-engine-proton-policy-engine-cluster.resource
    port: 9800
    source_type: internal
  rds:
    hosts: mariadb-mariadb-master.resource
    hosts_read: mariadb-mariadb-cluster.resource
    password: eisoo.com123
    port: 3330
    port_read: 3330
    rds_type: MariaDB
    source_type: internal
    username: anyshare
  redis:
    connect_type: sentinel
    master_group_name: mymaster
    password: eisoo.com123
    sentinel_hosts: proton-redis-proton-redis-sentinel.resource
    sentinel_password: eisoo.com123
    sentinel_port: 26379
    sentinel_username: root
    source_type: internal
    username: root

zookeeper:
  data_path: /sysvol/zookeeper/zookeeper_data
  env: null
  hosts:
  - node-91-101
  - node-91-102
  - node-91-103
  resources:
    limits:
      cpu: "1"
      memory: 2Gi
    requests:
      cpu: 500m
      memory: 1Gi

Confirming the expansion result

Check the cluster status: kubectl get nodes
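
The node check can also be scripted. The following is a minimal sketch and not part of the product tooling: `not_ready_nodes` is a hypothetical helper name. It reads the table printed by `kubectl get nodes` on standard input and prints the name of every node whose STATUS column is not exactly `Ready`.

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not "Ready".
# Expects the output of `kubectl get nodes` (header row included) on stdin.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on a master node (needs cluster access):
#   kubectl get nodes | not_ready_nodes
```

Empty output means every listed node reports `Ready`. A node shown as `Ready,SchedulingDisabled` is also printed, because the comparison is exact.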

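9 Proton-etcd expansion

This chapter only needs to be performed in the following scenarios: expanding a single node to three nodes, expanding two nodes to three nodes, or expanding a three-node single-master cluster to a three-node three-master cluster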

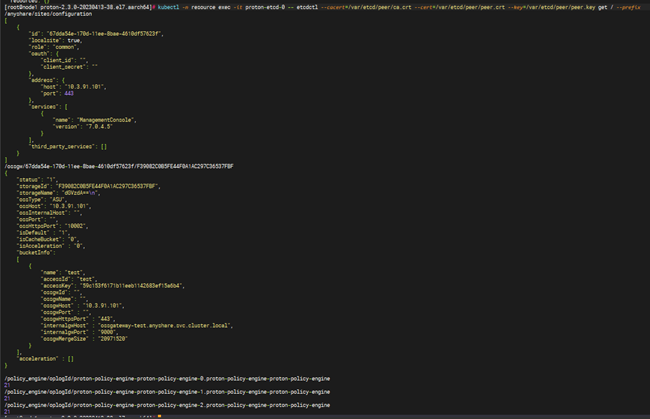

Step 1 View the proton-etcd data by running: kubectl -n resource exec -it proton-etcd-0 -- etcdctl --cacert=/var/etcd/peer/ca.crt --cert=/var/etcd/peer/peer.crt --key=/var/etcd/peer/peer.key get / --prefix

Step 2 Export the proton-etcd configuration by running: helm3 get values proton-etcd -n resource > proton-etcd.yaml

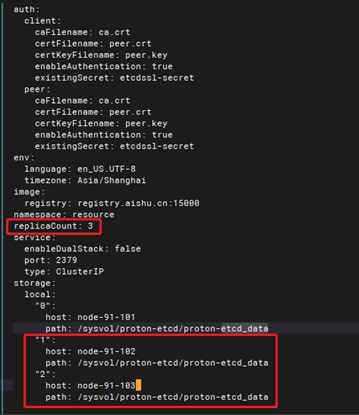

Step 3 Edit the proton-etcd configuration file to change the replica count and the node information

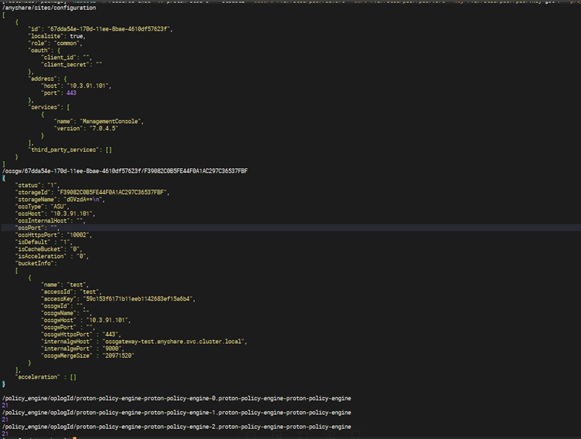

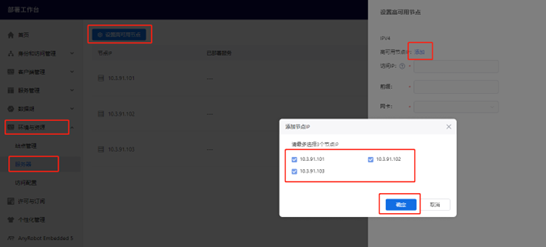

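Change the replica count to 3 and add entries 1 and 2 under storage.local. Fill in host according to the actual environment, and keep the path of entries 1 and 2 the same as that of entry 0, as shown in the screenshot:

Step 4 Upload the proton-etcd expansion tool to the server (download the script that matches the server architecture). The script path is as follows: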

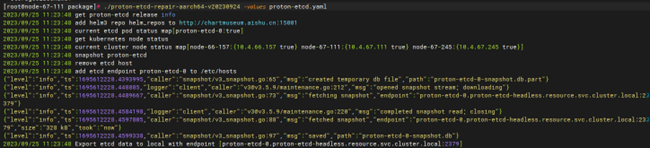

Step 5 Make the script executable: chmod +x ./proton-etcd-repair-aarch64-v20230925 (use the script that matches your architecture)

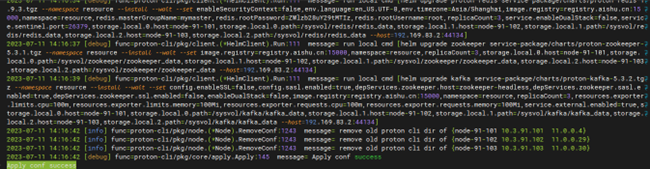

Step 6 Run the proton-etcd expansion: ./proton-etcd-repair-aarch64-v20230925 -values proton-etcd.yaml (use the script that matches your architecture), then wait for the expansion to complete

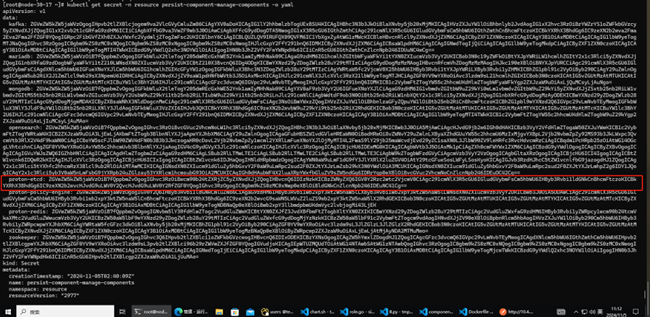

Step 7 Run kubectl edit secret -n resource persist-component-manage-components and copy the content circled in the screenshot below

Use the copied content to run the command shown in the screenshot below

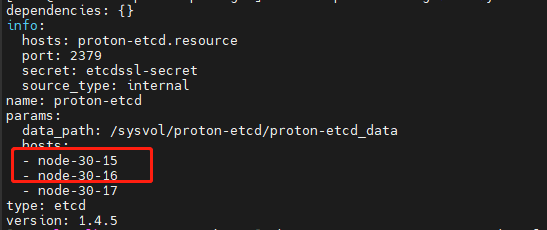

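Edit the file with vi component-manage.etcd.yaml and add the newly expanded nodes to it. Note: the nodes must be listed in the same order as the etcd nodes returned by proton-cli get conf, as shown in the screenshot below: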
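
To see the node order that the edited file has to follow, the etcd host list can be extracted from the cluster configuration. This is a minimal sketch under stated assumptions: `etcd_hosts` is a hypothetical helper name, and the input is a file saved with `proton-cli get conf > cluster.yaml` that contains a top-level `proton_etcd:` key with a `hosts:` list, as in the configuration shown earlier in this document.

```shell
# Hypothetical helper: print the hosts listed under proton_etcd, in file order.
etcd_hosts() {   # usage: etcd_hosts <cluster.yaml>
  awk '
    /^proton_etcd:/               { in_block = 1; next }
    # Any other top-level key ends the proton_etcd block.
    in_block && /^[^ \t#-]/       { in_block = 0; in_hosts = 0 }
    in_block && /^[ \t]*hosts:/   { in_hosts = 1; next }
    # List items under hosts: strip the leading "- " and print the host name.
    in_block && in_hosts && /^[ \t]*-[ \t]*/ {
      sub(/^[ \t]*-[ \t]*/, ""); print; next
    }
    # Any other non-empty line ends the hosts list.
    in_block && in_hosts && !/^[ \t]*$/ { in_hosts = 0 }
  ' "$1"
}

# Usage:
#   proton-cli get conf > cluster.yaml
#   etcd_hosts cluster.yaml
```

The printed order is the one to reproduce in component-manage.etcd.yaml. The helper does simple line matching rather than full YAML parsing, so it only suits the plain layout that proton-cli prints.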

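After editing the file, run the command shown in the screenshot below to base64-encode it

Copy the base64 string produced in the previous step and use it to replace the value of the proton-etcd field shown by kubectl edit secret -n resource persist-component-manage-components, then save and exit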
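
The exact commands appear only in the screenshots, so they are not reproduced here. As a rough equivalent, the decode and re-encode round trip can be sketched with the coreutils `base64` tool. The helper names `decode_to_file` and `encode_file` are hypothetical, and the sketch assumes the secret value is ordinary base64 text.

```shell
# Hypothetical helper: decode a base64 string (as copied from the secret)
# into a file that can then be edited.
decode_to_file() {   # usage: decode_to_file <base64-string> <output-file>
  printf '%s' "$1" | base64 -d > "$2"
}

# Hypothetical helper: encode a file as one unbroken base64 line,
# suitable for pasting back into the secret.
encode_file() {      # usage: encode_file <input-file>
  base64 < "$1" | tr -d '\n'
}

# Usage:
#   decode_to_file '<copied value>' component-manage.etcd.yaml
#   vi component-manage.etcd.yaml
#   encode_file component-manage.etcd.yaml
```

Stripping the newlines matters because `base64` wraps long output by default, while the value in the secret is a single line.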

Step 8 Update the proton-etcd endpoints in proton-policy-engine by running the following in order: proton-cli get conf > cluster.yaml; proton-cli apply -f cluster.yaml

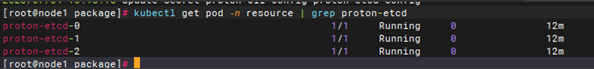

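Step 9 Confirm the proton-etcd replica count and running status by running: kubectl get pod -n resource | grep proton-etcd

proton-etcd should have three replicas, all in the Running state, as shown in the screenshot: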

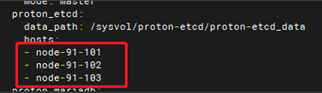
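
The same pod check can be done without reading the table by eye. In this minimal sketch, `running_etcd_pods` is a hypothetical helper name. It reads the output of `kubectl get pod -n resource` on standard input and counts the pods named `proton-etcd-<n>` that are `Running` with all of their containers ready.

```shell
# Hypothetical helper: count proton-etcd pods that are Running and fully ready.
# Expects `kubectl get pod -n resource` output (NAME READY STATUS ...) on stdin.
running_etcd_pods() {
  awk '$1 ~ /^proton-etcd-[0-9]+$/ && $3 == "Running" {
         split($2, r, "/")          # READY column, e.g. "1/1"
         if (r[1] == r[2]) n++
       }
       END { print n + 0 }'
}

# Usage on a master node (needs cluster access):
#   kubectl get pod -n resource | running_etcd_pods
```

After a successful expansion to three replicas the printed count should be 3.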

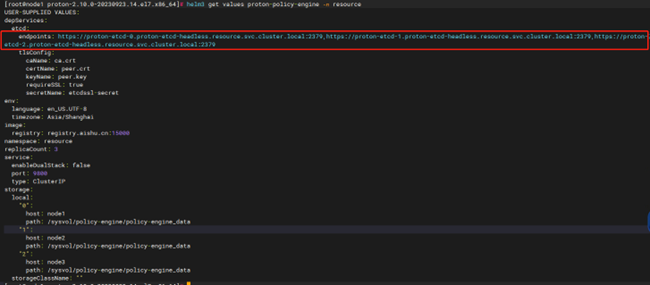

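Step 10 Confirm the proton-etcd configuration by running: proton-cli get conf

The configuration should show proton-etcd with three replicas, as shown in the screenshot:

Step 11 Confirm the proton-etcd data by running: kubectl -n resource exec -it proton-etcd-1 -- etcdctl --cacert=/var/etcd/peer/ca.crt --cert=/var/etcd/peer/peer.crt --key=/var/etcd/peer/peer.key get / --prefix

The data returned should be identical to the data seen before the expansion

Step 12 Confirm the proton-etcd endpoints in proton-policy-engine by running: helm3 get values proton-policy-engine -n resource. In the proton-policy-engine configuration, etcd.endpoints should include proton-etcd-0, proton-etcd-1, and proton-etcd-2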
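
Comparing two long dumps by eye is error-prone, so one option is to save both to files and compare them byte for byte. This is a minimal sketch: `same_dump` is a hypothetical helper name, and the file names in the usage comment are placeholders.

```shell
# Hypothetical helper: succeed only if the two dump files are byte-identical.
same_dump() {   # usage: same_dump <before-file> <after-file>
  cmp -s "$1" "$2"
}

# Usage (the -it flags are dropped so the redirected output stays clean):
#   before the expansion:
#     kubectl -n resource exec proton-etcd-0 -- etcdctl \
#       --cacert=/var/etcd/peer/ca.crt --cert=/var/etcd/peer/peer.crt \
#       --key=/var/etcd/peer/peer.key get / --prefix > etcd-before.txt
#   after the expansion, run the same command against proton-etcd-1
#   and redirect to etcd-after.txt, then:
#     same_dump etcd-before.txt etcd-after.txt && echo "data unchanged"
```

If services wrote to etcd between the two dumps, the files can differ legitimately, in which case `diff etcd-before.txt etcd-after.txt` shows what changed.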

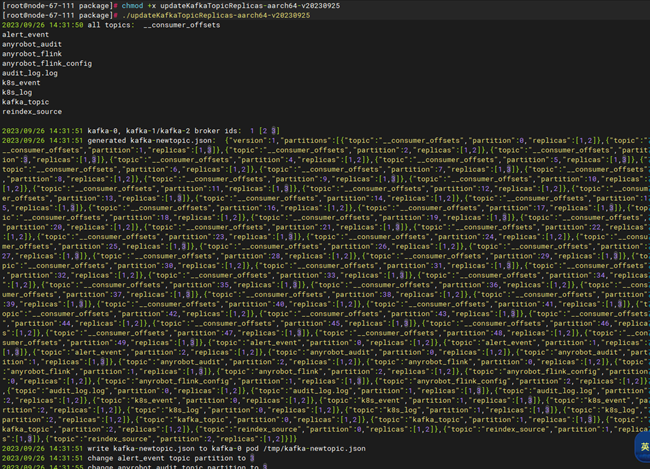
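
The endpoint check can be approximated with a plain text search. In this minimal sketch, `missing_etcd_endpoints` is a hypothetical helper name. It scans the whole output of `helm3 get values proton-policy-engine -n resource` for the three pod names rather than parsing the etcd.endpoints field itself, so treat it as a quick sanity check only.

```shell
# Hypothetical helper: print each expected proton-etcd member name that does
# not appear anywhere in the text read from stdin.
missing_etcd_endpoints() {
  values=$(cat)
  for name in proton-etcd-0 proton-etcd-1 proton-etcd-2; do
    case "$values" in
      *"$name"*) ;;              # found, nothing to report
      *) echo "$name" ;;         # not found, report it
    esac
  done
}

# Usage on a master node (needs cluster access):
#   helm3 get values proton-policy-engine -n resource | missing_etcd_endpoints
```

Empty output means all three member names were found.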

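10 Modifying Kafka data replicas

This chapter only needs to be performed in the following scenarios: expanding a single node to three nodes, expanding two nodes to three nodes, or expanding a three-node single-master cluster to a three-node three-master cluster. It assumes the kafka pods have already been expanded to 3 replicas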

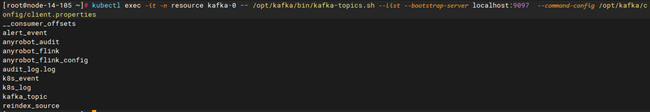

Step 1 List the current topics: kubectl exec -it -n resource kafka-0 -- /opt/kafka/bin/kafka-topics.sh --list --bootstrap-server localhost:9097 --command-config /opt/kafka/config/client.properties

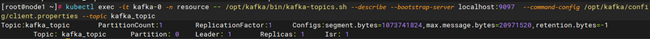

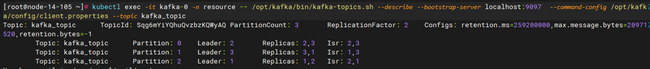

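Step 2 View the partition count and replica count of an existing topic: kubectl exec -it kafka-0 -n resource -- /opt/kafka/bin/kafka-topics.sh --describe --bootstrap-server localhost:9097 --command-config /opt/kafka/config/client.properties --topic kafka_topic (the topic kafka_topic is used as an example). At this point both the partition count and the replica count of the topic are 1

Step 3 Modify the partition count and replica count of the existing topics: upload the modification tool to the server, make the script executable, and run it (use the script that matches your architecture). The script directory is as follows:

Make the script executable: chmod +x updateKafkaTopicReplicas-amd64-v20230925

Run the modification: ./updateKafkaTopicReplicas-amd64-v20230925

Step 4 After the modification, view the partition count and replica count of the existing topic again. The partition count is now 3 and the replica count is 2 (the topic kafka_topic is used as an example)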

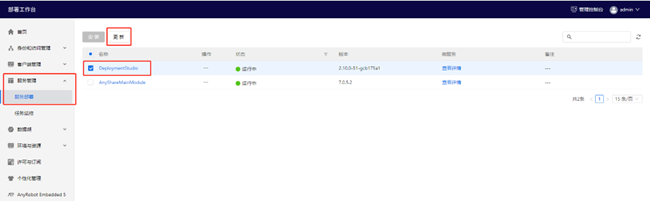
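
When there are many topics, the summary lines of the describe output can be condensed into one line per topic. In this minimal sketch, `topic_summary` is a hypothetical helper name. It assumes the usual `kafka-topics.sh --describe` layout, in which each topic has a summary line containing `Topic:`, `PartitionCount:` and `ReplicationFactor:` followed by per-partition lines.

```shell
# Hypothetical helper: print "<topic> <partitions> <replication factor>"
# for every topic summary line read from stdin.
topic_summary() {
  awk '/PartitionCount:/ {
         for (i = 1; i < NF; i++) {
           if ($i == "Topic:")             t = $(i + 1)
           if ($i == "PartitionCount:")    p = $(i + 1)
           if ($i == "ReplicationFactor:") r = $(i + 1)
         }
         print t, p, r
       }'
}

# Usage (omitting --topic describes every topic):
#   kubectl exec kafka-0 -n resource -- /opt/kafka/bin/kafka-topics.sh --describe \
#     --bootstrap-server localhost:9097 \
#     --command-config /opt/kafka/config/client.properties | topic_summary
```

Any topic that still prints `1 1` after the modification script has run was not updated.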

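11 Deployment workbench expansion

This chapter only needs to be performed in the following scenarios: expanding a single node to three nodes, expanding two nodes to three nodes, or expanding a three-node single-master cluster to a three-node three-master cluster

Note: updating the deployment workbench also updates the MongoDB connection information of the services that depend on MongoDB

Step 1 Update the deployment workbench: log in to the deployment workbench, go to 【Service Management】--【Service Deployment】, select DeploymentStudio, and click 【Update】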

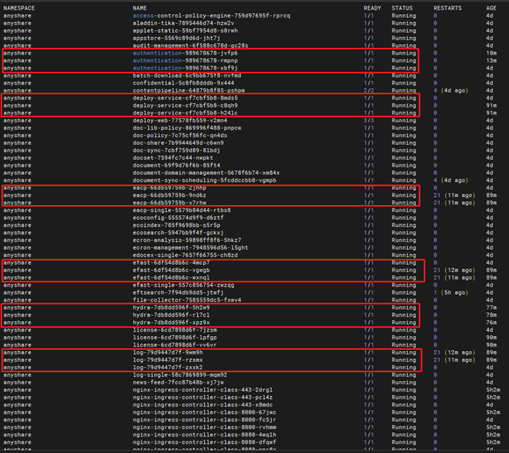

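Step 2 Select the current version and click 【Next】. Fill in the configuration items, change the service replica count to 3, click 【SUBMIT】, click 【Next】, click 【OK】, and wait for the update to complete

Step 3 Confirm the result of the deployment workbench expansion by running: kubectl get pod -n anyshare. The service should have three replicas, all running normally

12 Configuring high availability

If high availability has already been configured in the current environment, skip this chapter

Step 1 Log in to the deployment workbench

Step 2 Go to 【Environment and Resources】-- 【Servers】, click 【Set High-Availability Nodes】-- click 【Add】, select the nodes, and click OK

Step 3 Fill in the access IP, the prefix, and the network interface, then click 【OK】

Step 4 Update the access configuration: go to 【Environment and Resources】-- 【Access Configuration】, click 【Modify Access Configuration】, and change the access address to the access IP set in Step 3 (a domain name can be used instead, provided that resolution from the domain name to the access IP has been configured), then click 【Save】

Step 5 Update the certificate: go to 【Environment and Resources】-- 【Access Configuration】, click 【Configure Certificate】-- click 【Generate Self-Signed Certificate】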